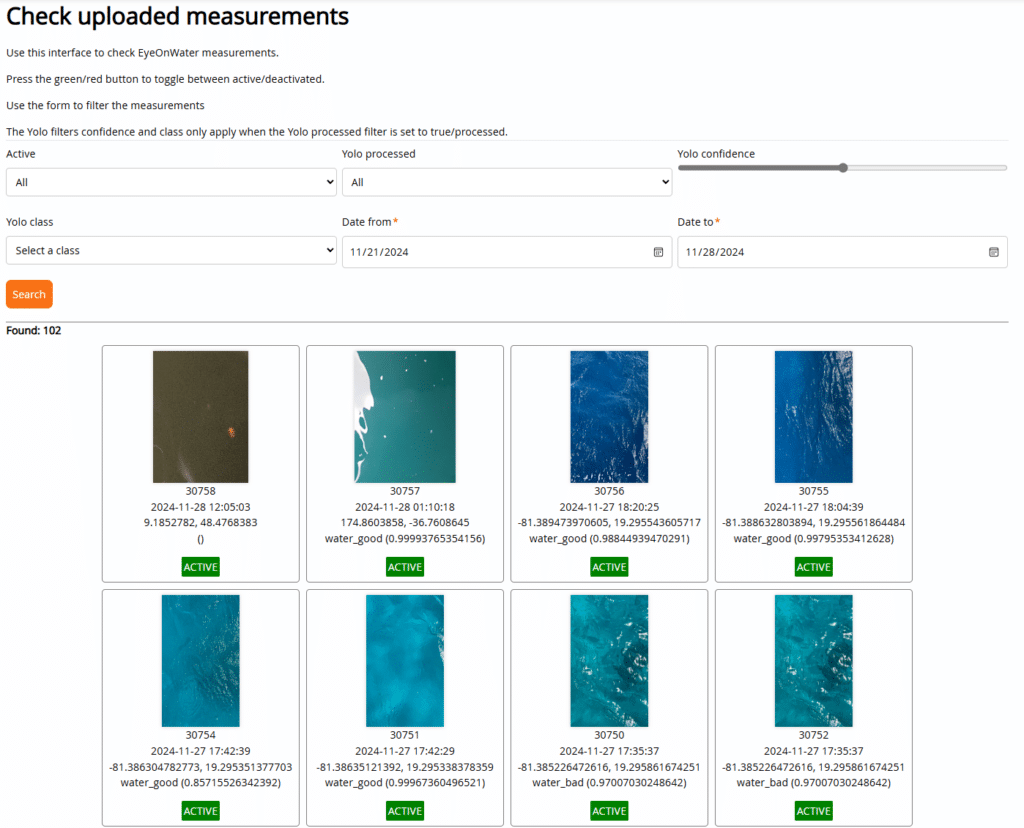

MARIS develops and operates the successful EyeOnWater app, which invites users to make and share images of water surfaces with their smartphones. This helps researchers classify rivers, lakes, coastal waters, seas, and oceans by colour. It can be used for both fresh and saline natural waters. The observations via the app are an extension of a long-term (over 100 years) set of watercolour observations made by scientists in the past.

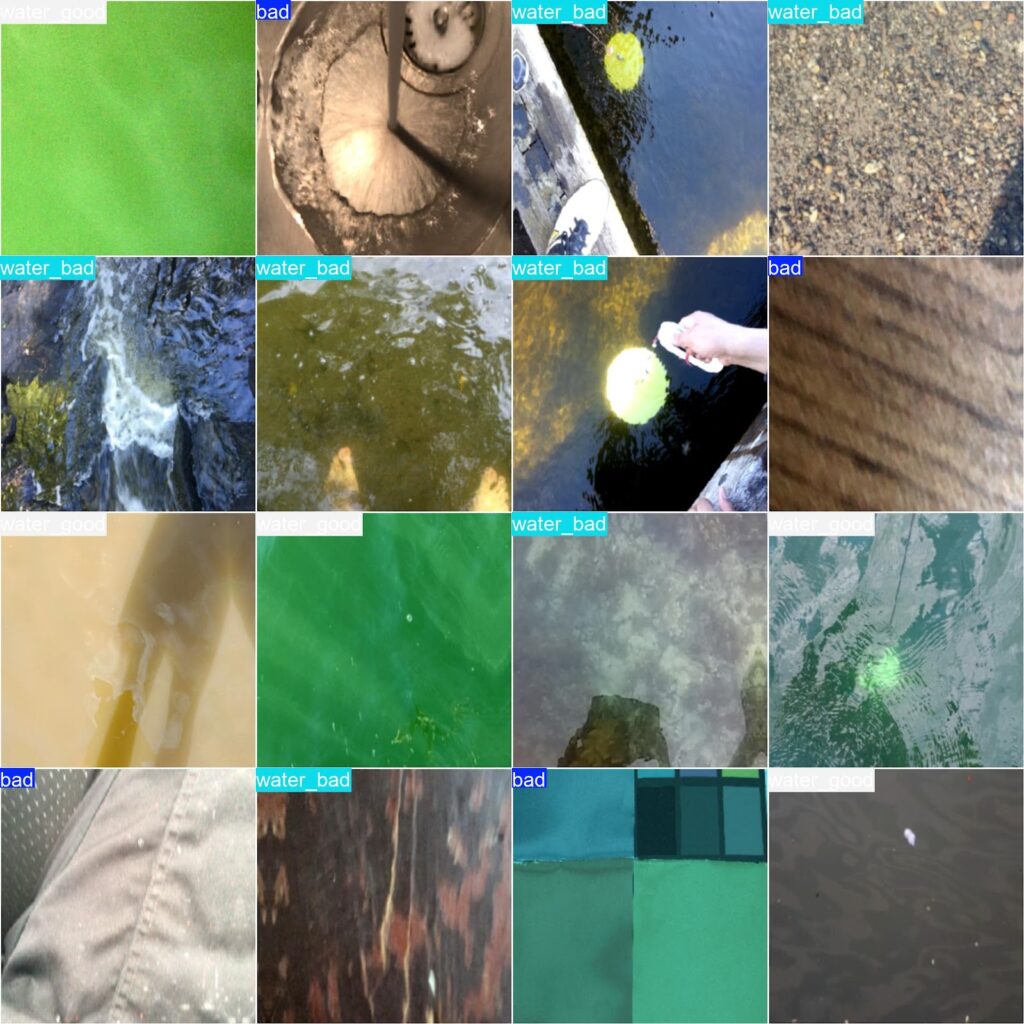

MARIS’s iMagine challenge has been to detect and automatically reject false images using iMagine AI services and approaches.